Technical basics series: A breakdown of Cython basics

Python can already call external C/C++ code from Python. However, Cython greatly simplifies that effort and boosts performance. Learn more about Cytho...

Haskell is a lazy functional language, but most importantly: Haskell is big. Instead of laying out the language systematically, let’s start with the parts that are most similar to Python and expand outwards to the “weirder” parts over time.

One bit of jargon for the many linguists in the room: Haskell is based on a typed lambda calculus, which means its semantics center around evaluating expressions instead of executing instructions (which it can also do).

Follow the directions here. The command line interpreter below is stack ghci. (GHC is the “Glasgow Haskell Compiler”. ghci is the interactive GHC.)

Math is largely the same.

Prelude> 2+3

5

Prelude> 4*2

8

Prelude> 4^2

16

Prelude> 4 / 2

2.0

Prelude> div 4 2

2

Prelude> 4 `div` 2

2

Prelude> 4 / 2

2.0Already things are looking weird though:

Prelude> result = 1 `div` 0

Prelude> Wait, what? Dividing by zero is undefined. How is Haskell okay with this? The answer is that we’ve defined something but not evaluated it. If I try to evaluate that, Haskell will rightly throw an exception:

Prelude> wtf

*** Exception: divide by zeroWhat is the value of something like 1 / 0 in Haskell? The answer is: ⊥. This is pronounced “bottom”, and it comes from lattice theory in math. ⊥ cannot be evaluated. It may cause Haskell to get caught in an infinite loop, it may cause a crash. Who knows! It’s unknowable. You can also write ⊥ as undefined in Haskell.

Lists look a lot like Python lists, with the exception that each element must have the same type:

Prelude> numbers = [1,2,3]

Prelude> numbers

[1,2,3]

Prelude> more_numbers = [0 .. 20]

Prelude> more_numbers

[0,1,2,3,4,5,6,7,8,9,10,11,12,13,14,15,16,17,18,19,20]They have a nifty range operator too (..). Deep down, Python and Haskell lists are very different. Every non-empty Haskell list has a head (its first element) and a tail (the rest of the list, which may be empty. So you can break down a non-empty list into its head and tail.

Prelude> head(numbers)

1

Prelude> tail(numbers)

[2,3]Even though I used parentheses to call those functions, this is actually bad Haskell style. “Function binding to argument” has the highest precedence in Haskell. Big surprise; it’s a functional language! So you would see the above called like so:

Prelude> head numbers

1

Prelude> tail numbers

[2,3]You can concatenate two lists together with the “++” function. This concatenation doesn’t affect either list and creates a new one.

Prelude> [1,2,3] ++ [4,5,6]

[1,2,3,4,5,6]Prelude> 9 : [8,7,6]

[9,8,7,6]Prelude> 1 : (2 : (3 : []))

[1,2,3]

Prelude> 1 : 2 : 3 : []

[1,2,3]

Prelude> 3 : []

[3]Like Python, Haskell has list comprehensions, which look more like their mathematical ancestors:

Prelude> [x^2 | x <- [1 .. 5]]

[1,4,9,16,25]Haskell is strongly-typed. The types for everything you type must be known before the code is compiled. What’s interesting is that Haskell, using type inference, can usually deduce what your types are without being explicitly told. Still, sometimes, like in Python, it’s a good idea to specify them for your human readers as well as your computer parsers.

If the elements of a list have type a, then we say the list has type [a].

In ghci you can find the type by doing :t:

Prelude> :t 'z'

'z' :: Char

Prelude> :t "abc"

"abc" :: [Char]Notice that types begin with a capital letter. You can’t define a variable with a capital letter in Haskell.

Prelude> :t numbers

numbers :: Num a => [a]Prelude> :t 1

1 :: Num p => pBut again, if you use Haskell in your day to day, it will figure out these restrictions for you, so you can usually ignore it at the beginning.

Haskell has a lot of types. We’ve seen some numerical ones, some characters, and lists of those things. You can define your own too.

Haskell functions are more like mathematical functions: they have no side effects and only compute values. They aren’t exactly like math functions though. I mean, a computer is involved; it’s not perfect. But quite often, and this is the real secret sauce about functional programming, you can reason about them as if they were!

Here’s a function that doubles a number:

Prelude> double x = 2 * xThe first word is the function name, and every token after that until the equal sign is an argument.

Prelude> double 3

6

Prelude> double 0

0

Prelude> double 2.3

4.6

Prelude> :t double

double :: Num a => a -> aPrelude> double (double 2)

8

Prelude> (double . double) 2

8

Prelude> double . double $ 2

8If f :: a → b and g :: b → c, then g . f :: a -> c . So the middle example is the application of the composition double . double to the value 2. The third way is just “programming laziness in not wanting to write parentheses”. It applies whatever is to its left to whatever is to its right.

Prelude> :t head

head :: [a] -> a

Prelude> :t tail

tail :: [a] -> [a]head takes a list of a and returns a value a (the first one). tail takes a list of a as input and outputs a list of a as output (everything but the first element).

Haskell handles multiple argument functions in a particular way. For example:

Prelude> squares x y = x^2 + y^2

Prelude> squares 3 4

25What’s the type of squares?

Prelude> :t squares

squares :: Num a => a -> a -> aYou can read this in two ways:

When mentally reasoning about Haskell, you may rely on the first method, but the second one is what Haskell is actually doing. The implication is all multi-argument functions are actually chains of single-argument functions. Chaining together single-argument functions in this way is called currying, after logician Haskell Curry (not the tasty food), one of the greats in early 20th-century logic and proto-computer science. To be pedantic, the type of this function is actually:

Prelude> :t squares

squares :: Num a => a -> (a -> a)

Prelude> (squares 3) 4

25

Prelude> squares 3 4

25You can evaluate Haskell functions in a piecemeal fashion. You know map from Python. It’s built-into Haskell too, but in case it weren’t, here’s how it could’ve been defined:

map :: (a -> b) -> [a] -> [b]

map f [] = []

map f (x:xs) = f x : map f xs

Prelude> map double [1 .. 3]

[2,4,6]

map double [1,2,3]

(double 1) : map double [2,3]

(double 1) : (double 2) : map double [3]

(double 1) : (double 2) : (double 3) : map double []

(double 1) : (double 2) : (double 3) : []

2 : 4 : 6 : []

[2,4,6]You can also use guards to define functions, like this absolute value function:

abs n | n >= 0 = n

| otherwise = -nLike Python, Haskell has lambda functions (lambdas come from functional languages). Instead of writing lambda like in Python, you write a \, which looks like part of the Greek letter lambda λ.

Prelude> (\x -> x + 1) 5

6To drive this all home, let’s define an addition function.

Prelude> add x y = x + yBecause of currying, add x is a function that takes a value and returns a function…that takes a value that returns a sum. So we could have defined add like this (and notice that ' is a legal character in Haskell):

Prelude> add' = \x -> (\y -> x + y)

Prelude> add' 1 2

3Finally, Haskell gives you one more way to partially apply a function, called a section. Let’s lay I wanted to refer to the addition function. In Haskell, you can write (+), which means “the function that takes two numerical arguments and returns their sum”.

Prelude> (+) 2 3

5

Prelude> (*) 4 3

12So if you wanted the “increment” section, you can do:

Prelude> (+1) 10

11How about the reciprocal section?

Prelude> (1/) 5

0.2How about the “append an exclamation point” section?

Prelude> (++"!") "hey"

"hey!"Haskell has tuples like Python, but they are strongly typed.

Prelude> :t (True,'a',False)

(True,'a',False) :: (Bool, Char, Bool)The word “polymorphism” shows up in language discussions all the time. In Haskell, imagine that you want to compute the length of a list. The length of a list doesn’t have anything to do with the type of the elements in the list. To record this fact, we use a lower-case letter, such as a, for the polymorphic type.

length :: [a] -> IntThis means length takes a list containing elements of arbitrary type and returns an Int. How could this be defined? You can use induction on lists. In math, you use induction to prove something is true for all numbers by:

Then you’ve proven it true for all (natural) numbers.

You can use the same idea to write provably-correct programs, especially in functional languages. For lists in particular, you need to make sure you have the base case (empty list) and inductive case (non-empty).

What’s the length of an empty list? Easy, it’s zero.

What’s the length of a non-empty list? Well, since it’s non-empty, you can write it as x:xs. The length of the list x:xs is one plus the length of xs. Now we have a definition.

length [] = 0

length (x:xs) = 1 + length xsNotice that x doesn’t appear on the right-hand side. We don’t use it at all. So we can replace it on the left with a wildcard to spare us from naming something we don’t care about.

length (_:xs) = 1 + length xsThis function works on lists of any type.

Prelude> length [True, False, True]

3

Prelude> length [5 .. 10]

6Let’s practice some more with this and define the function zip, which takes two lists and returns a list of tuples.

zip :: [a] -> [b] -> [(a,b)]Our first attempt to write this would be something like

zip [] [] = []

zip (x:xs) [] = []

zip [] (y:ys) = []

zip (x:xs) (y:ys) = (x,y) : zip xs ysIf you think about it, the first equation is redundant. If either list is empty, you want to return empty. So here’s a more idiomatic version.

zip _ [] = []

zip [] _ = []

zip (x:xs) (y:ys) = (x,y) : zip xs ysLet’s now define zipWith, which takes a function of two arguments and two lists, and combines them.

zipWith :: (a -> b -> c) -> [a] -> [b] -> [c]

zipWith f _ [] = []

zipWith f [] _ = []

zipWith f (x:xs) (y:ys) = f x y : zipWith f xs ys

Prelude> zipWith (+) [1,2,3] [4,5,6]

[5,7,9]New data types in Haskell use the data keyword. The most basic types are like enums in Python.

data Bool = False | TrueWe say “Bool is a type with two values, called data constructors, False and True”. We can use this new data type to create functions (this one is built-in):

not :: Bool -> Bool

not True = False

not False = TrueWe can enhance the data types by using those type classes mentioned before. For example, let’s look at addition, (+). What is the type of addition? Is it…

(+) :: Int -> Int -> Int -- too specific, what about floats?

(+) :: Float -> Float -> Float -- too specific, what about ints?

(+) :: a -> a -> a -- too generic; how do you "add" two Booleans?

(+) :: Num a => a -> a -> a -- ahh, yes. Type classes. Restrict it to numbers.Numerical type classes are complicated, so let’s look at a simple on, the Eq type class:

class Eq a where

(==) :: a -> a -> Bool

(/=) :: a -> a -> Bool

x /= y = not (x == y)So Eq defines “not equal” /= in terms of “equal” ==, which we need to supply if we claim our type belongs to it. To make your type a member of a type class, you must declare it as an instance:

instance Eq Bool where

x == y = if x then y else not yFirst: this is how if statements work in Haskell. All of them must have both a then and an else clause, and both clauses must be of the same type. Yes, if expressions are typed.

Prelude> if True then 1 else 0

1

Prelude> if True then 1 else "no"

:83:14: error: As a reminder, Haskell won’t evaluate something unless it has to.

Prelude> if False then 1 `div` 0 else 100

100Does this if statement do what we want? If x and y are both True then the statement if True then True else not True evaluates to True. Good; they’re equal.

If they’re both False, then the statement evaluates to not False or True. Good; they’re equal.

If they’re different, you can see that the result is False.

When defining types, sometimes we can let Haskell automatically make our types instances of type classes. For example, a typical declaration will look like:

Prelude> data MyBool = MyTrue | MyFalse deriving (Eq, Ord, Show)

Prelude> MyTrue == MyTrue

True

Prelude> MyTrue == MyFalse

False

Prelude> MyTrue < MyFalse

TrueThis type now belongs to the Eq type class, meaning == is defined for its values. Ord means it can be arranged in some order, depending on the order you specified the terms in the declaration. Show means the values can be printed to the screen. Some values, like functions, don’t belong to Show. But there’s no reason why you couldn’t have Haskell print the source code or a docstring. All you have to do is implement the show function, which converts a value into a string somehow:

class Show where

show :: a -> String -- String is the same thing as [Char]You can create type synonyms using the type keyword. For example:

type Word = [Char]Haskell treats Word exactly like a [Char]. This is only to improve readability and doesn’t create a new type (and saves on overhead).

We’ve already talked about the useful function map:

Prelude> map (\x -> x + 1) [10 .. 20]

[11,12,13,14,15,16,17,18,19,20,21]Like Python, Haskell has the filter function, which takes a predicate (a function that returns a boolean) and a list, and filters the elements that satisfy the predicates.

Prelude> filter (\x -> mod x 3 == 0 && mod x 5 == 0) [1 .. 30]

[15,30]This filters the numbers between 1 and 30 that are divisible by both 3 and 5. While we’re at it, let’s solve the infamous FizzBuzz in Haskell.

fizzBuzzEvaluate x

| mod x 15 == 0 = "FizzBuzz"

| mod x 3 == 0 = "Fizz"

| mod x 5 == 0 = "Buzz"

| otherwise = show x Note that show x converts x into a String.

Prelude> map fizzBuzzEvaluate [1 .. 50]

["1","2","Fizz","4","Buzz","Fizz","7","8","Fizz","Buzz","11","Fizz","13",

"14","FizzBuzz","16","17","Fizz","19","Buzz","Fizz","22","23","Fizz","Buzz","26",

"Fizz","28","29","FizzBuzz","31","32","Fizz","34","Buzz","Fizz","37","38","Fizz",

"Buzz","41","Fizz","43","44","FizzBuzz","46","47","Fizz","49","Buzz"]Let’s say you have a list of lists, and want to concatenate them all into one list. You already have (++), which concatenates two lists together. Solve it with induction on lists. In the empty list-of-lists case, you just return the empty list. In the non-empty case, you just (++) the head of the list-of-lists to the rest of your computation:

concat :: [[a]] -> [a]

concat [[]] = []

concat (xs:xss) = xs ++ concat xssHow would you write filter? Assume you’re given a function p of type a → Bool.

filter :: (a -> Bool) -> [a] -> [a]

filter _ [] = []

filter p (x:xs) = if p x then x : filter p xs else filter p xsMaybe you’ve seen a similarity in all these functions: handle the base case; handle the non-empty case. Something like this

f [] = e

f (x:xs) = x @ f xsThis higher-order function is called fold, and is defined like so:

foldr f e [] = e

foldr f e (x:xs) = f x (foldr f e xs)

1 : 2 : 3 : []

1 (`f` 2 `(f` 3 (`f` e)))foldr basically replaces the empty list with e and : with f. It’s pronounced “fold right”. It is a distant, superior cousin to reduce in Python. A lot of functions can be defined with foldr:

length = foldr (\x y -> 1+x) 0

sum = foldr (+) 0

product= foldr (*) 1

concat = foldr (++) []Let’s say we want to capture a computation that can fail somehow, like division (when zero is in the denominator). You might model this with an optional type. In Haskell this is called Maybe:

data Maybe a = Nothing | Just aa here is a polymorphic type. It can be instantiated to be a concrete type, like Int or String. This is called a sum type since the | is like a “sum”. (“or” is like a sum, “and” is like a product. That’s why these are called algebraic data types.)

Maybe isn’t a concrete type by itself. An example of a concrete type would be something like Maybe Int. For example, let’s make a “safe” division:

safeDiv :: Int -> Int -> Maybe Int

safeDiv _ 0 = Nothing

safeDiv x y = Just (x `div` y)

> safeDiv 4 0

Nothing

> safeDiv 6 2

Just 3We can think of programming with Maybe as effectful programming. Here, the “effect” or “context” is “potential failure”.

We’ve been doing this all along with lists, which have type [a], which could also (strangely) be written as [] a. The effect that lists represent is nondeterminism. 1 + 2 = 3, sure, but what’s [1,5] “+” [2,7]?

Let’s make our own lists.

data List a = Empty | Cons a (List a) deriving ShowYou have two options: the Empty list, and a list that’s made of an element of type a consed onto another List a. It belongs to the Show type class so that we can print out values.

Prelude> lst = Cons 'a' (Cons 'b' (Cons 'c' Empty))

Prelude> :t lst

lst :: List CharHopefully the similarity to Maybe is more clear!

Mapping is a big deal. A lot of work reduces to mapping a computation over a list, or some more complicated structure. But we just said that lists are only one type of “effect” or “context” or “structure”. How do we extend something like map to be useful over more general structures beyond lists?

What does that mean? Well, think of map like a “microscope”, or think of yourself as “unboxing” a structure when you use it. When you map a function over a list, you are opening up the list and applying the function to it one element at a time. map lets you access the inner structure of your data type.

We can make something like map for Maybe.

maybeMap :: (a -> b) -> Maybe a -> Maybe b

maybeMap f Nothing = Nothing

maybeMap f (Just x) = Just (f x)

> maybeMap (\x -> x + 1) (Just 5)

Just 6Notice how we “drilled into” the type to do operations on Int inside.

Let’s make a map for our List a type:

mymap :: (a -> b) -> List a -> List b

mymap f Empty = Empty

mymap f (Cons x xs) = Cons (f x) (mymap f xs)

> mymap (\x -> x * 10) (Cons 1 (Cons 2 (Cons 3 Empty)))

Cons 10 (Cons 20 (Cons 30 Empty))In Haskell, this generalized map has a name: fmap. Types that have an fmap belong to a type class called Functor. Here’s how that type class might look:

class Functor ff where

fmap :: (a -> b) -> ff a -> ff bHaskell already knows that Maybe is a Functor:

Prelude> fmap (+1) (Just 3)

Just 4Let’s make our List into a Functor:

instance Functor List where

fmap g Empty = Empty

fmap g (Cons x xs) = Cons (g x) (fmap g xs)Let’s look at a more complicated and useful type, that of a binary tree:

data Tree a = Leaf a | Node (Tree a) (Tree a) deriving ShowA binary tree is either a leaf holding a value or it’s a node with a left and right subtree.

Prelude> tree = Node (

Node

(Leaf "abcde")

(Leaf "b")) (

Node

(Leaf "zazy")

(Leaf "zxxxxaaa")

)

Prelude> :t tree

tree :: Tree [Char]We can make Tree into a Functor:

instance Functor Tree where

-- fmap :: (a -> b) -> Tree a -> Tree b

fmap g (Leaf x) = Leaf (g x)

fmap g (Node l r) = Node (fmap g l) (fmap g r)

> fmap length tree

Node (

Node

(Leaf 5)

(Leaf 1) (

Node

(Leaf 4)

(Leaf 8)

)length has type String → Int, and we fmapped it over a Tree String and got a Tree Int.

Functors must satisfy two laws:

fmap id = id

fmap f . fmap g = fmap (f . g)Another way to look at functors: they lift a function “up” into a context or effect. If (+1) is “function that adds 1”, and we want to fmap it across a Maybe, then fmap (+1) is “function that increments with potential failure”. Another way of saying this is to look at types. If the function has type a → b, then the fmapped-function has type ff a → ff b, where ff is the Functor, such as Maybe, [a], List, Tree, etc.

Monads are sort of a joke in the Haskell community. They have a reputation for being hard to teach, and have spawned countless attempts to teach them. We’re going to talk about them with “effectful programming” in mind.

Say you want to define a calculator, but all it can do is divide numbers. Its type would something like

data Expr = Value Int | Div Expr Expr Evaluating this expression can be done with this eval function.

eval :: Expr -> Int

eval (Value x) = x

eval (Div x y) = eval x `div` eval yUh-oh. But what if we divide by zero? Maybe we should use our safeDiv function from before.

eval :: Expr -> Maybe Int

eval (Value x) = Just x

eval (Div a b) = case eval a of -- "first context"

Nothing -> Nothing

Just m -> case eval y of -- "second context"

Nothing -> Nothing

Just n -> safeDiv m nThis is right but, oof, it’s ugly. We test for the failure effect of Maybe twice. Can we extract that functionality out? What are we doing here? We look at the Maybe value. If it’s a success, we want to pluck out the value from within Just, perhaps doing something with it later. Once we’re done But we want our computation to be part of a sequence, with later computations depending on previous. Notice how time has crept into our pure functional programming.

Since we’re creating a chain of computations, we want to both unwrap the value from its context and repackage it up once we’re done so that the next “link” can use it. We need something that looks like this:

bind :: Maybe a -> (a -> Maybe b) -> Maybe bThis is a weird type, but it takes 1) a contextual value, 2) a function that wraps a “bare” value into a context somehow, and returns the result of the sequence. This function is often called “bind” because it binds to the subsequent part of the computation.

Haskell people write “bind” as an infix function, >>=. (In fact, this is part of the Haskell logo. The other part is the lambda from lambda calculus.)

For our problem here, let’s define bind as

mx >>= f = case mx of

Nothing -> Nothing

Just x -> f xIt seems clear that we just yanked out the “Maybe” logic from the above expression into this new function. If we use lambdas, we can see the chain more clearly.

eval :: Expr -> Maybe Int

eval (Value x) = Just x

eval (Div a b) = eval a >>= \m -> eval b >>= \n -> safeDiv m n

-- reformat for clarity

eval (Div a b) = eval a >>= \m ->

eval b >>= \n ->

safeDiv m nThis chain of binds and lambdas looks a lot like some imperative language. In fact, Haskell has a shorthand for this: the do block:

eval (Div a b) = do

m <- eval a

n <- eval b

safeDiv m nWe say that this function is monadic. Monads are a type class; and every monad is a functor. Every monad has to implement two functions:

class Monad where

(>>=) :: m a -> (a -> m b) -> m b

return :: m aI’m sorry about the name return. All return does is take a bare value and “lift” it into the context in some sort of minimal way. For Maybe, return is the same thing as Just. For lists, it could be either the singleton list or a repeating list with the value repeated forever.

Haskell uses monads for effectful programming. The biggest side effects we deal with are things like printing to screen, writing or reading from a file, anything that goes out into the world and spoiling our walled-garden of mathematical purity. But it turns out that IO, the type that handles such things, is a monad. I’m sparing you the nitty-gritty, but this is how Haskell does useful things.

So “real world Haskell” often looks like this:

readlines path = do

contents <- readFile path

let somethingYouWant = ... -- function over String

return somethingYouWantThe type of readFile is IO String. You can think of IO String as “some data that’s been out there from the real world, tainted with imperfection”. But despite this, you can still compute with the data it’s wrapped with these monadic functions.

Once of the hidden gems about functional programming is being able to prove things about your programs in the same way you prove things in math. Fortunately, the kind of proof required in Haskell is not as complicated as a mathematical proof.

Let’s take the reverse function, acting on a list. How would you reverse a list? Well, let’s go back to our two-step process:

reverse :: [a] -> [a]

reverse [] = []

reverse (x:xs) = reverse xs ++ [x]

reverse [1,2,3] =

reverse [2,3] ++ [1] =

reverse [3] ++ [2] ++ [1] =

reverse [] ++ [3] ++ [2] ++ [1] =

[] ++ [3] ++ [2] ++ [1] =

[3,2,1]It works!

Let’s say we want to prove that reverse is its own inverse:

reverse . reverse = id or reverse (reverse xs) = reverse xs

Empty case:

reverse (reverse []) =

reverse [] =

[] Non-empty case:

reverse (reverse (x:xs)) =

reverse (reverse xs ++ [x])Uh-oh. We’re stuck. Now we have a lemma to prove before we can finish our proof. What happens when you reverse a concatenation?

reverse (xs ++ ys) = ?How does (++) work again?

[] ++ ys = ys

(x:xs) ++ ys = x : (xs ++ ys)So (++) sticks one element from xs onto ys, one at a time. Thus it’s a linear (O(n)) operation. More on that later.

How does reverse work on a concatenation? Based on its definition, we might think that it reverses them:

reverse (xs ++ ys) = reverse ys ++ reverse xsLet’s prove this by induction. First the empty case:

reverse ([] ++ ys) =

reverse ys -- definition of [] ++ ys

= reverse ys ++ reverse [] -- right hand side

= reverse ys ++ [] --definition of reverse []

= reverse ys -- definition of appending []Now the non-empty case:

reverse ((x:xs) ++ ys) =

reverse (x:(xs++ys)) -- definition of (++)

= reverse (xs++ys) ++ [x] -- definition of reverse

= reverse ys ++ reverse xs ++ [x] -- induction hypothesis

= reverse ys ++ reverse ((x:xs)) -- definition of reverseSo it works! Now we can use it in our original proof.

reverse (reverse (x:xs)) =

reverse (reverse xs ++ [x]) =

reverse [x] ++ reverse (reverse xs)

[x] ++ reverse (reverse xs) -- assumption to be proven

[x] ++ xs -- induction hypothesis

x:xsWhat’s the reverse of a singleton list? Can we prove that reverse [x] = [x]?

reverse [] = [] --check

reverse [x] =

reverse (x:[]) =

reverse [] ++ [x]

[] ++ [x]

[x]Check! And we’re done.

Was that impressive? Maybe you think this proof business is all unnecessary. Isn’t it enough to implement the algorithm and just test for the edge cases? Well, testing will always be important (even in Haskell. Don’t let anyone tell you otherwise), but being able to reason about functions and their behavior is a very powerful concept, and maybe someday it will be as common place as syntax highlighting in IDEs. To me, this is a very test-driven approach. Before you even write down how reverse works, you could demand that it be its own inverse and have a compiler check that for you automatically.

Notice how much of this focused on the “pure” functional approach. I can make these kinds of statements because Haskell uses immutable values.

However, we may still produce inefficient code! Our implementation of reverse is very slow on long lists; it goes like O(n^2). We are appending elements of a list n times, and append itself takes O(n) times. That stinks. In Python or C or Java right now, you could right an O(n) time algorithm. Is Haskell beautiful but useless?

To fix this, we’re going to use what I think is the coolest feature of functional languages: to calculate a better function. What we want is a better reverse, call it reverse', that combines the old definition with append:

reverse_helper xs ys = reverse xs ++ ysWhy this? Well, if we can define this, then our new function could be

reverse' xs = reverse_helper xs []The second slot in reverse_helper is called an accumulator, and this is a common strategy to improve efficiency by accumulating intermediate results. Let’s define it by induction.

reverse_helper [] ys

= reverse [] ++ ys

= [] ++ ys

= ys

reverse_helper (x:xs) ys

= reverse (x:xs) ++ ys -- definition of reverse_helper

= reverse xs ++ [x] ++ ys -- definition of reverse

= reverse_helper xs ([x] ++ ys) --induction hypothesis

= reverse_helper xs (x:ys) -- definition of appendSo now we have a new function with no linear-time append cost!

reverse xs = reverse_helper xs []

where reverse_helper xs ys = case xs of

[] -> ys

(x:xs) -> reverse_helper xs (x:ys)We’ve used a naïve but easy-to-understand definition to bootstrap ourselves into a less-clear but more performant version! Sounds like what a compiler does: translating high-level code into low-level code for a computer.

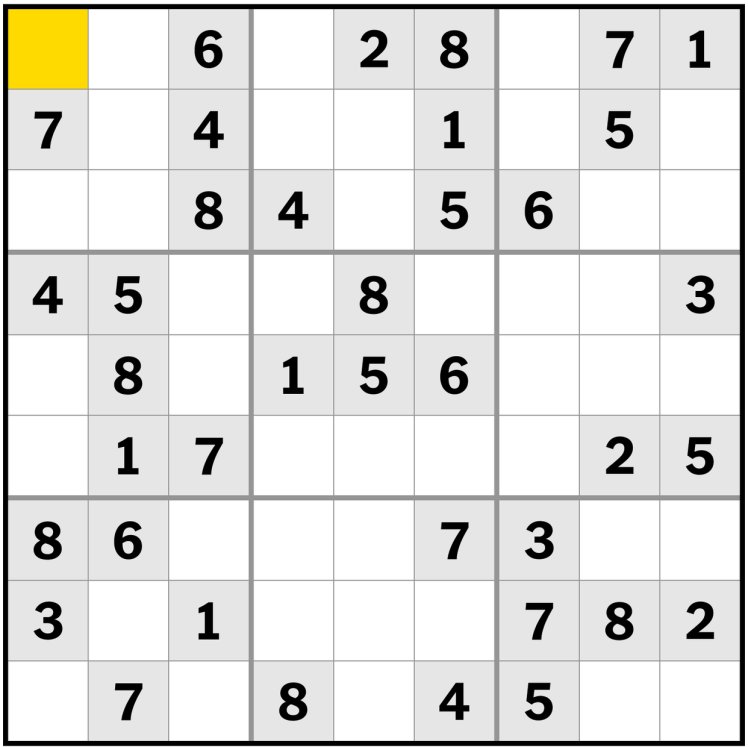

数独 (suu-doku, “number” + “solitary”) is a puzzle with 9 symbols and a 9 x 9 grid. Each symbol must appear exactly once in each row, column, and 3 x 3 box. You’re given a grid and have to fill in the remaining symbols subject to those constraints. Here’s the New York Times easy puzzle for August 19, 2021:

Usually you use the digits 1 - 9 for the symbols, but you can use any distinct nine symbols. Let’s start by defining some types. We’ll represent a row as a list of the characters “1” through “9”, and the full Sudoku grid as a list of rows.

-- matrix is a "list of lists"

digits = ['1' .. '9']

type Row a = [a]

type Matrix a = [Row a]

type Digit = Char

-- Sudoku grids are 9 x 9 grids

type Grid = Matrix DigitWe’ll let the character “0” represent a blank square.

-- blanks are '0'

blank :: Digit -> Bool

blank = (== '0')Here’s the above example in our representation.

*Main> game = [ ['0','0','6', '0','2','8', '0','7','1'],

['7','0','4', '0','0','1', '0','5','0'],

['0','0','8', '4','0','5', '6','0','0'],

['4','5','0', '0','8','0', '0','0','3'],

['0','8','0', '1','5','6', '0','0','0'],

['0','1','7', '0','0','0', '0','2','5'],

['8','6','0', '0','0','7', '3','0','0'],

['3','0','1', '0','0','0', '7','8','2'],

['0','7','0', '8','0','4', '5','0','0'] ] :: Grid

If a space is blank, then it could hold any digit 1 - 9. So we create a function that represents what possible numbers can be filled into each space.

choice :: Digit -> [Digit]

choice digit = if blank digit then digits else [digit]

choices :: Grid -> Matrix [Digit]

choices = map (map choice)For our test puzzle, this looks like:

*Main> choices game

[["123456789","123456789","6","123456789","2","8","123456789","7","1"],

["7","123456789","4","123456789","123456789","1","123456789","5","123456789"],

["123456789","123456789","8","4","123456789","5","6","123456789","123456789"],

["4","5","123456789","123456789","8","123456789","123456789","123456789","3"],

["123456789","8","123456789","1","5","6","123456789","123456789","123456789"],

["123456789","1","7","123456789","123456789","123456789","123456789","2","5"],

["8","6","123456789","123456789","123456789","7","3","123456789","123456789"],

["3","123456789","1","123456789","123456789","123456789","7","8","2"],

["123456789","7","123456789","8","123456789","4","5","123456789","123456789"]]Recall that ”123” is a list of Char in Haskell. You may be confused at the definition of choices, which is map (map choice). Remember to think of map as a “microscope” into a structure. So if our grids are two levels deep (lists of lists), you need two maps to see inside.

We take the above choices and build a grid for each of the possible combination of those cells. This is called the Cartesian product of many different sets:

cp [] = [[]]

cp (xs:xss) = [x:ys | x <- xs, ys <- yss] where yss = cp xss

> cp [ [1,2], [3], [7,8]]

[[1,3,7],[1,3,8],[2,3,7],[2,3,8]]How many grids are we talking about here? Let’s say you are given 23 of the available 81 squares filled in. Since you can put 9 values in all the blanks, this will generate 9^58 grids! Of course, most of these aren’t legal because there will be duplicates in most, so either we 1) generate fewer grids or 2) learn how to prune them really well. Let’s start with option #2.

Assume we have a list of list of strings like the following:

[ ["12", "45"], ["ab", "de"] ]We want to generate every possible 2 x 2 “grid” from these choices. Observe:

*Main> map cp [ ["12", "45"], ["ab", "de"] ]

[["14","15","24","25"],["ad","ae","bd","be"]]

*Main> cp . map cp $ [ ["12", "45"], ["ab", "de"] ]

[["14","ad"],["14","ae"],["14","bd"],["14","be"],

["15","ad"],["15","ae"],["15","bd"],["15","be"],

["24","ad"],["24","ae"],["24","bd"],["24","be"],

["25","ad"],["25","ae"],["25","bd"],["25","be"]]The function cp . map cp gives us every “expansion” of characters from our choices, so:

expand = cp . map cpOkay, so we know how to generate all the grids. Our final answer should be something like

solve = filter valid . expand . choicesWe generate the grids and then filter the legal or valid ones. We’re going to need a “no duplicates” function:

nodups [] = True -- no duplicates in an empty list!

nodups (x:xs) = all (/= x) xs && nodups xsOur valid function should look like

valid :: Grid -> Bool

valid grid = all nodups (rows grid) &&

all nodups (cols grid) &&

all nodups (boxs grid)We just need to implement rows, cols, and boxs.

rows is easy. Our representation of grids is just a list of lists. So our grids are already lists of rows!

rows = id -- identity functioncols is harder because we need the transpose. The trick here is to change a row like [1,2,3] into [[1], [2], [3]], its column representation, and them zip all of those column’d lists together with cons.

cols [xs] = [[x] | x <- xs]

cols (xs:xss) = zipWith (:) xs (cols xss)boxs is more complicated. First, we group the grid into groups of three:

group [] = []

group xs = take 3 xs : group (drop 3 xs)Then we transpose them with cols, and then concatenate them (ungrouping them):

ungroup = concat

boxs = ungroup . map ungroup .

map cols .

group . map groupRemember that f . map f means you want to map f to the “inner” structure, and then apply f to the “outer” structure. In this case we have lists of lists.

This is a full solution! But it’s inefficient. We could be pruning grids much earlier. In particular, if squares are already filled in, we can eliminate that value in its row, column, and grid. The pruneRow function does this:

pruneRow :: Row [Digit] -> Row [Digit]

pruneRow row = map (remove fixed) row where fixed = [d | [d] <- row]

remove :: [Digit] -> [Digit] -> [Digit]

remove ds [x] = [x]

remove ds xs = filter (`notElem` ds) xsHere’s how pruneRow looks in practice:

pruneRow [ ['3'],['6'], ['1'], ['3','4'], ['1', '3', '5', '6','8'], ['9']]

["3","6","1","4","58","9"]3, 6, and 1 are “already filled in”, so they can be pruned from the other choices. With a bit of calculation as with reverse earlier, we can derive a prune function. It’s only about 10 lines of derivation, but it’s pretty intense, so I’ll skip to the punchline:

pruneBy f = f . map pruneRow . f

prune = pruneBy boxs . pruneBy cols . pruneBy rows

many f x = if x == y then x else many f y where y = f x

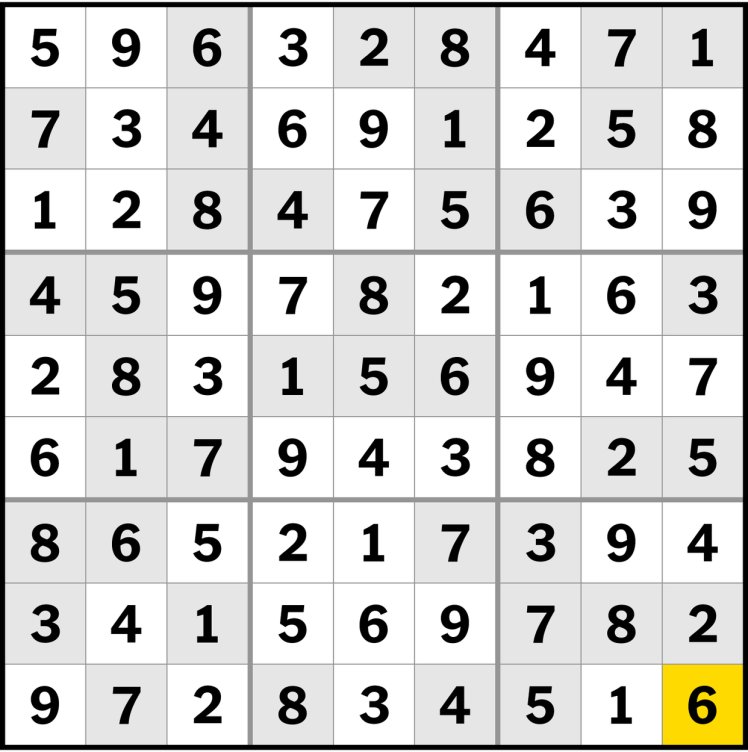

solve = filter valid . expand . many prune . choicesThis is the final solve function. It solves the easy puzzle almost immediately:

*Main> solve game

[["596328471",

"734691258",

"128475639",

"459782163",

"283156947",

"617943825",

"865217394",

"341569782",

"972834516"]]

This approach won’t work on harder puzzles with fewer filled-in values, but that’s fine! With some changes, those can be solved too--and they can be solved using the same “calculational” techniques that I showed earlier!

Reference: Sudoku images, Thinking Functionally With Haskell, by Richard Bird